Brandon Walsh has already proposed a session about tools for curating sound, and what I'm proposing here might well fit into his session. In case it turns out to be too different, though, I wanted to elaborate.

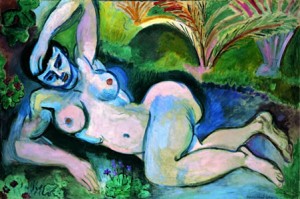

At THATCamp VA 2012, I proposed and then participated in a discussion about how digital tools could help us think not just about tidily marked-up plain-text files, but also about the messier multimedia data of image files, sound files, movie files, and so on. We ended up talking at length about commercial tools that search images with other images (for example, Google's Search By Image) and that search sound with sound (for example, Shazam). Much of our discussion revolved around the limitations of such tools. Yes, we can use them to search images with other images, but, we asked, would a digital tool ever be able to tell that a certain satiric cartoon is meant to represent a certain artwork? For example, would a computer ever be able to tell that this cartoon represents this artwork?

Our conversation was largely speculative (and if anyone wants to continue it, I'd be happy to have a similar session this time around).

Since then, however, I've become involved with a project that takes such thinking beyond speculation. As a participant in the HiPSTAS institute, I've been experimenting with ARLO, a tool originally designed to train supercomputers to recognize birdcalls. With it, we can, for example, try to teach the computer to recognize instances of laughter and then have it query all of PennSound, a large archive of poetry recordings, for similar sounds. We might then be able to track when audiences laugh at poetry readings, whether or not the poet intended the laughter.

The project involves both archivists and scholars. The archivists are interested in adding value to their collections (for example, by identifying instances of song in the StoryCorps archive), and the scholars are interested in how this new tool might help us better visualize and explore poetic sound and historical sound recordings.

My sound-related proposal, then, is this: to have a conversation about potential use cases for this and similar tools. Now that we know we can identify certain kinds of sounds in large sound collections, how should we use such a tool? Since Brandon is already interested in developing sound collections using Audacity, I thought we might also bring this big-data, machine-learning tool into the conversation.
